Overview of Testing Tools

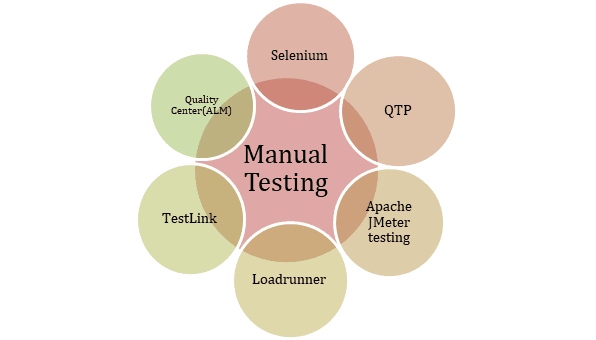

Around 2012, software testing tools underwent significant changes that reflected the evolving demands of the software development lifecycle. The landscape was divided between manual and automated testing tools, each playing a crucial role in ensuring software quality. Manual testing, still widely used at the time, involved human testers executing test cases based on predefined scenarios. This process allowed for exploratory testing and a deeper understanding of the user experience, but it was often time-consuming and prone to human error.

Automated testing, meanwhile, continued to gain traction and marked a notable shift in how testing was approached. Advances in technology produced numerous automated testing tools designed to make testing faster and more efficient. These tools supported regression testing, load testing, and functional testing, which were essential for maintaining software quality amid increasingly rapid release cycles. By minimizing manual intervention, automation improved both accuracy and productivity, freeing teams to focus their manual effort on more complex testing scenarios.

This transition from manual to automated testing had profound implications for quality assurance teams. Integrating these tools into the development process gave teams faster feedback and broader test coverage. As organizations adopted agile methodologies, the ability to run automated tests quickly became a key factor in their success. In this sense, 2012 was a pivotal year in the evolution of testing tools, marking a shift toward more standardized and efficient testing processes that shaped later software development practices.

Key Automated Testing Tools: HP QuickTest Professional, LoadRunner, and Selenium

In the dynamic landscape of software development, automated testing tools have become essential for ensuring the functionality and performance of applications. In 2012, three tools stood out for covering different testing needs: HP QuickTest Professional, LoadRunner, and Selenium.

HP QuickTest Professional (QTP), renamed UFT (Unified Functional Testing) in later versions, specializes in functional and regression testing. Test scripts are written in VBScript, so testers can create robust scripts that are easy to reuse and modify. Its user-friendly interface and its integration with other tools, such as HP Quality Center, allow UI tests to be automated across a range of application types. QTP's main strengths are its keyword-driven testing capabilities and its extensive object recognition support, which helps tests identify UI elements reliably and improves the accuracy of test execution.
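To make the keyword-driven idea concrete, here is a minimal Python sketch of a keyword-driven runner. It is not QTP's API (QTP builds tests in its own keyword view and stores them as VBScript); the keywords `EnterText`, `Click`, and `VerifyText`, the `FakeApp` stand-in, and the step format are all hypothetical, chosen only to show how a table of keywords maps to reusable actions.

```python
from typing import Callable, Dict, List, Tuple

# One test step: (keyword, target object, value). Hypothetical format.
Step = Tuple[str, str, str]


class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.clicked: List[str] = []


def enter_text(app: FakeApp, target: str, value: str) -> None:
    app.fields[target] = value


def click(app: FakeApp, target: str, value: str) -> None:
    app.clicked.append(target)


def verify_text(app: FakeApp, target: str, value: str) -> None:
    actual = app.fields.get(target)
    if actual != value:
        raise AssertionError(f"{target}: expected {value!r}, got {actual!r}")


# Keyword table: testers compose tests from these names without writing code.
KEYWORDS: Dict[str, Callable[[FakeApp, str, str], None]] = {
    "EnterText": enter_text,
    "Click": click,
    "VerifyText": verify_text,
}


def run_keyword_test(app: FakeApp, steps: List[Step]) -> List[str]:
    """Execute steps in order and return one PASS/FAIL line per step.
    An unknown keyword raises KeyError, since the test table itself is broken."""
    results: List[str] = []
    for keyword, target, value in steps:
        try:
            KEYWORDS[keyword](app, target, value)
            results.append(f"PASS {keyword} {target}")
        except AssertionError as exc:
            results.append(f"FAIL {keyword} {target}: {exc}")
    return results


if __name__ == "__main__":
    login_test: List[Step] = [
        ("EnterText", "username", "alice"),
        ("EnterText", "password", "secret"),
        ("Click", "login_button", ""),
        ("VerifyText", "username", "alice"),
    ]
    for line in run_keyword_test(FakeApp(), login_test):
        print(line)
```

Because each keyword is implemented once, a new test is just a new table of steps, which is what makes keyword-driven tests easy to reuse and modify.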

LoadRunner, originally developed by Mercury Interactive and sold by HP in 2012 (and later by Micro Focus), focuses on load testing to evaluate application performance under various conditions. It simulates virtual users and measures the application's behavior and performance, identifying potential bottlenecks before deployment. Scripts created with its Virtual User Generator (VuGen) are written primarily in C, with additional protocols that support languages such as Java, allowing teams to write customized scripts for specific scenarios. Its comprehensive reporting and detailed analytics provide valuable insight into system performance, making it an invaluable asset for performance testing.
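The virtual-user concept can be sketched in a few lines of Python: several threads repeatedly execute a transaction and record response times. This is only an illustration, assuming a hypothetical `fake_transaction` that sleeps in place of a real request; it does not reflect LoadRunner's own scripting or its controller.

```python
import threading
import time
from statistics import mean
from typing import Callable, List


def run_virtual_users(transaction: Callable[[], None], users: int, iterations: int) -> List[float]:
    """Run `iterations` transactions on each of `users` concurrent threads
    (one thread per virtual user) and return every response time in seconds."""
    timings: List[float] = []
    lock = threading.Lock()  # protects the shared timings list

    def vuser() -> None:
        for _ in range(iterations):
            start = time.perf_counter()
            transaction()
            elapsed = time.perf_counter() - start
            with lock:
                timings.append(elapsed)

    threads = [threading.Thread(target=vuser) for _ in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every virtual user has finished
    return timings


def fake_transaction() -> None:
    """Hypothetical stand-in for one request to the system under test."""
    time.sleep(0.01)


if __name__ == "__main__":
    results = run_virtual_users(fake_transaction, users=5, iterations=4)
    print(f"{len(results)} transactions, mean {mean(results) * 1000:.1f} ms, "
          f"max {max(results) * 1000:.1f} ms")
```

Threads are adequate here because the simulated transaction is I/O-like (it sleeps); a real tool distributes virtual users across processes or machines to generate meaningful load.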

Selenium, by contrast, offers a flexible, open-source solution for automating web applications. It supports multiple programming languages, including Java, C#, Python, and Ruby, catering to a wide range of developer preferences. Its architecture supports testing across different browsers and platforms, which adds to its versatility. The framework handles both functional and regression testing, enabling teams to automate complex test scenarios. With its rich feature set and active community, Selenium is a popular choice for web application automation.

Types of Testing: Functionality, Load, Stress, Volume, and Security Testing

By 2012, several types of testing had become fundamental to software quality assurance, each targeting a different aspect of software reliability and performance. Among these, functionality testing, load testing, stress testing, volume testing, and security testing play essential roles in the development lifecycle.

Functionality testing verifies that the software behaves as its requirements dictate. It ensures that all features work correctly and meet user expectations. Testers typically compare actual outputs with expected outputs for given inputs, examining workflows, error handling, and system interactions to ensure comprehensive coverage. Effective functionality testing is crucial for confirming that applications provide a seamless user experience.
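A table-driven test is one common way to compare expected and actual outputs for given inputs. The sketch below uses Python's standard `unittest` module; the `shipping_cost` function and its pricing rule are hypothetical requirements invented for illustration.

```python
import math
import unittest


def shipping_cost(weight_kg: float) -> float:
    """Hypothetical requirement: up to 1 kg costs 5.00, each additional
    started kilogram adds 2.00, and non-positive weights are rejected."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    extra_kg = math.ceil(weight_kg) - 1
    return 5.00 + 2.00 * extra_kg


class ShippingCostTest(unittest.TestCase):
    # Each row pairs an input with the output the requirement dictates.
    CASES = [(0.5, 5.00), (1.0, 5.00), (1.2, 7.00), (3.0, 9.00)]

    def test_expected_outputs(self) -> None:
        for weight, expected in self.CASES:
            with self.subTest(weight=weight):
                self.assertAlmostEqual(shipping_cost(weight), expected)

    def test_rejects_invalid_input(self) -> None:
        # Error handling is part of the specified behavior too.
        with self.assertRaises(ValueError):
            shipping_cost(0)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingCostTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

Keeping the cases in a table makes it cheap to add a row whenever a requirement or a reported defect reveals a new input worth checking.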

Load testing assesses an application's behavior under expected user loads. It determines how many concurrent users the system can support and evaluates performance metrics such as response times and resource utilization. This type of testing is essential for identifying potential bottlenecks early, ensuring that systems can sustain peak traffic without degradation.
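Once a load test has collected response times, they are usually reduced to summary metrics. This sketch computes the average, a nearest-rank 90th percentile, and the maximum, then checks the percentile against a service-level threshold. The sample timings and the 500 ms threshold are made-up inputs, not measurements, and nearest-rank is only one of several percentile definitions.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class LoadSummary:
    avg_ms: float
    p90_ms: float
    max_ms: float
    meets_sla: bool


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize(response_ms: List[float], sla_p90_ms: float) -> LoadSummary:
    """Reduce raw response times to the metrics a load test report shows."""
    p90 = percentile(response_ms, 90)
    return LoadSummary(
        avg_ms=sum(response_ms) / len(response_ms),
        p90_ms=p90,
        max_ms=max(response_ms),
        meets_sla=p90 <= sla_p90_ms,
    )


if __name__ == "__main__":
    # Made-up sample timings in milliseconds, for illustration only.
    timings = [120, 135, 150, 160, 180, 210, 240, 300, 450, 900]
    print(summarize(timings, sla_p90_ms=500))
```

A percentile is often more informative than the average here: one slow outlier (900 ms) inflates the mean, while the 90th percentile reflects what most users actually experience.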

In conjunction with load testing, stress testing examines how systems behave under extreme conditions that exceed normal operational capacity. By pushing the system's resources past their limits and monitoring performance, it identifies potential failure points and the load at which functionality breaks down.
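A stress test often ramps load up in steps until the system starts failing. In this sketch, `simulated_error_rate` is a hypothetical model of a service with a fixed capacity of 250 concurrent users, standing in for real measurements, and the 5% error threshold is an assumed failure criterion.

```python
from typing import Callable, Optional


def simulated_error_rate(concurrent_users: int, capacity: int = 250) -> float:
    """Hypothetical system model: no errors up to `capacity` users; beyond
    that, requests over capacity fail, so the error rate climbs with load."""
    if concurrent_users <= capacity:
        return 0.0
    return (concurrent_users - capacity) / concurrent_users


def find_breaking_point(
    error_rate_at: Callable[[int], float],
    start: int,
    step: int,
    max_users: int,
    max_error_rate: float = 0.05,
) -> Optional[int]:
    """Ramp load up in fixed steps (step must be positive) and return the
    first user count whose error rate exceeds `max_error_rate`, or None if
    `max_users` is reached without failure."""
    users = start
    while users <= max_users:
        if error_rate_at(users) > max_error_rate:
            return users
        users += step
    return None


if __name__ == "__main__":
    point = find_breaking_point(simulated_error_rate, start=50, step=50, max_users=1000)
    print(f"Error threshold first exceeded at {point} concurrent users")
```

In a real stress test, `error_rate_at` would run a load step against the system and measure the outcome; the ramp logic stays the same.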

Volume testing, or data testing, evaluates system performance when the system is subjected to varying amounts of data. It is particularly vital for applications that manage large datasets, ensuring that performance remains stable as data volume scales.

Security testing is paramount for identifying vulnerabilities within applications, ensuring that sensitive information is protected against unauthorized access and security breaches. Security testing methodologies often rely on automated tools to identify weaknesses in applications systematically.
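As one example of an automated security check, the sketch below feeds classic SQL injection payloads into two login functions backed by an in-memory SQLite database (Python's standard `sqlite3` module). The schema, credentials, and payload list are hypothetical; a real security test suite would use a much larger payload set and dedicated tooling.

```python
import sqlite3
from typing import Callable, List

LoginFn = Callable[[sqlite3.Connection, str, str], bool]

# Classic payloads that try to make the WHERE clause always true or
# comment out the password check. Illustrative, far from exhaustive.
INJECTION_PAYLOADS: List[str] = ["' OR '1'='1", "' OR 1=1 --", "alice' --"]


def make_db() -> sqlite3.Connection:
    """In-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_unsafe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = (
        f"SELECT COUNT(*) FROM users WHERE name = '{name}' "
        f"AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Parameterized query: sqlite3 binds the values as data, never as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


def injection_findings(login: LoginFn) -> List[str]:
    """Return every payload that logs in without the real password."""
    conn = make_db()
    findings: List[str] = []
    for payload in INJECTION_PAYLOADS:
        try:
            if login(conn, payload, payload):
                findings.append(payload)
        except sqlite3.Error:
            # A database error is not a bypass (though it may leak details).
            pass
    return findings


if __name__ == "__main__":
    print("unsafe:", injection_findings(login_unsafe))
    print("safe:  ", injection_findings(login_safe))
```

The concatenated query accepts all three payloads, while the parameterized version rejects them, which is the kind of weakness such tests are meant to surface systematically.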

In 2012, spreadsheets such as Excel were a common way to track and analyze the results of these testing types, with a pass/fail status recorded for each manual test case. This documentation plays a critical role in analyzing performance trends and ensuring that appropriate measures are taken to support software reliability and security.
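A pass/fail spreadsheet can also be analyzed programmatically once it is exported as CSV, a format Excel can save to. The sketch assumes a hypothetical column layout (`test_id`, `description`, `tester`, `status`) and counts only Pass and Fail toward the pass rate, treating other statuses such as Blocked as not executed.

```python
import csv
import io
from collections import Counter
from typing import Dict

# Hypothetical manual test log exported from a spreadsheet as CSV.
SAMPLE_LOG = """test_id,description,tester,status
TC-001,Login with valid credentials,alice,Pass
TC-002,Wrong password shows an error,alice,Pass
TC-003,Password reset email is sent,bob,Fail
TC-004,Session expires after timeout,bob,Blocked
"""


def summarize_results(csv_text: str) -> Dict[str, object]:
    """Count statuses, compute the pass rate over executed tests, and list failures."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    statuses = [row["status"].strip().lower() for row in rows]
    counts = Counter(statuses)
    executed = counts["pass"] + counts["fail"]  # Blocked/other = not executed
    return {
        "counts": dict(counts),
        "pass_rate": counts["pass"] / executed if executed else 0.0,
        "failed": [row["test_id"] for row, s in zip(rows, statuses) if s == "fail"],
    }


if __name__ == "__main__":
    print(summarize_results(SAMPLE_LOG))
```

Running the same summary over each test cycle's export gives a simple trend line for the pass rate without changing how testers record results.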

Manual Testing Strategies and Estimation Techniques

Manual testing strategies play a critical role in software development, particularly when used alongside automated testing tools. While automation improves efficiency and coverage, manual testing remains essential for scenarios that require human intuition and insight. Many strategies can be employed during manual testing to ensure the software meets its intended functionality. One effective approach is to track user interactions, such as mouse clicks, hovers, and keyboard inputs, precisely. Verifying these actions confirms that components and interfaces operate as designed, ultimately safeguarding the user experience.
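Interaction tracking can be supported by a simple checklist that records which interaction types each component has been exercised with and reports the gaps. The component names and the set of required interactions (click, hover, keyboard) in this Python sketch are assumptions for illustration.

```python
from typing import Dict, List, Set

# Interaction types every component should be exercised with (assumed list).
REQUIRED_INTERACTIONS: Set[str] = {"click", "hover", "keyboard"}


class InteractionLog:
    """Records which interaction types testers have exercised per component."""

    def __init__(self) -> None:
        self.seen: Dict[str, Set[str]] = {}

    def record(self, component: str, interaction: str) -> None:
        if interaction not in REQUIRED_INTERACTIONS:
            raise ValueError(f"unknown interaction type: {interaction}")
        self.seen.setdefault(component, set()).add(interaction)

    def gaps(self, components: List[str]) -> Dict[str, List[str]]:
        """Map each component to the interaction types not yet exercised."""
        missing: Dict[str, List[str]] = {}
        for component in components:
            untested = REQUIRED_INTERACTIONS - self.seen.get(component, set())
            if untested:
                missing[component] = sorted(untested)
        return missing


if __name__ == "__main__":
    log = InteractionLog()
    log.record("search_box", "click")
    log.record("search_box", "keyboard")
    log.record("menu", "hover")
    print(log.gaps(["search_box", "menu", "submit_button"]))
```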

To maximize the effectiveness of manual testing, it’s essential to establish clear testing parameters. Testers should routinely execute exploratory test cases, which encourage the discovery of edge cases that automated scripts might overlook. Moreover, involving diverse team members in the testing process can foster varied perspectives, aiding in the identification of defects or usability concerns that may arise in different usage contexts. This collaborative effort enhances the overall quality of the final product.

Estimation techniques are equally vital to manual testing, particularly during configuration and installation testing. Accurate estimates support resource allocation and scheduling, helping testing phases stay within project timelines. Kick-off meetings and analysis of historical data allow teams to gauge the complexity of testing requirements, leading to more precise estimates of time and effort. Best practice is to document manual testing efforts meticulously, which enables better integration with automated processes. Combining the two reduces redundancy and improves testing outcomes, resulting in a well-rounded quality assurance strategy.
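Historical data analysis can be as simple as multiplying the number of planned test cases by the average time per case observed in earlier cycles. This sketch assumes that model plus a contingency buffer; the 20% default buffer and the sample history are illustrative assumptions, not recommended values.

```python
from statistics import mean
from typing import List


def estimate_hours(
    planned_cases: int,
    historical_minutes_per_case: List[float],
    contingency: float = 0.2,
) -> float:
    """Estimate manual testing effort in hours: planned test cases times the
    historical average minutes per case, plus a contingency buffer expressed
    as a fraction of the base estimate (0.2 = 20%, an assumed default)."""
    if not historical_minutes_per_case:
        raise ValueError("need at least one historical data point")
    base_minutes = planned_cases * mean(historical_minutes_per_case)
    return round(base_minutes * (1 + contingency) / 60, 1)


if __name__ == "__main__":
    # Illustrative minutes per test case from earlier test cycles.
    history = [12, 15, 18, 15]
    print(estimate_hours(120, history))  # 120 cases x 15 min x 1.2 / 60 = 36.0 hours
```

The buffer is kept as an explicit parameter so a team can tune it from how far past estimates missed, rather than hiding it inside the average.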