Abstract

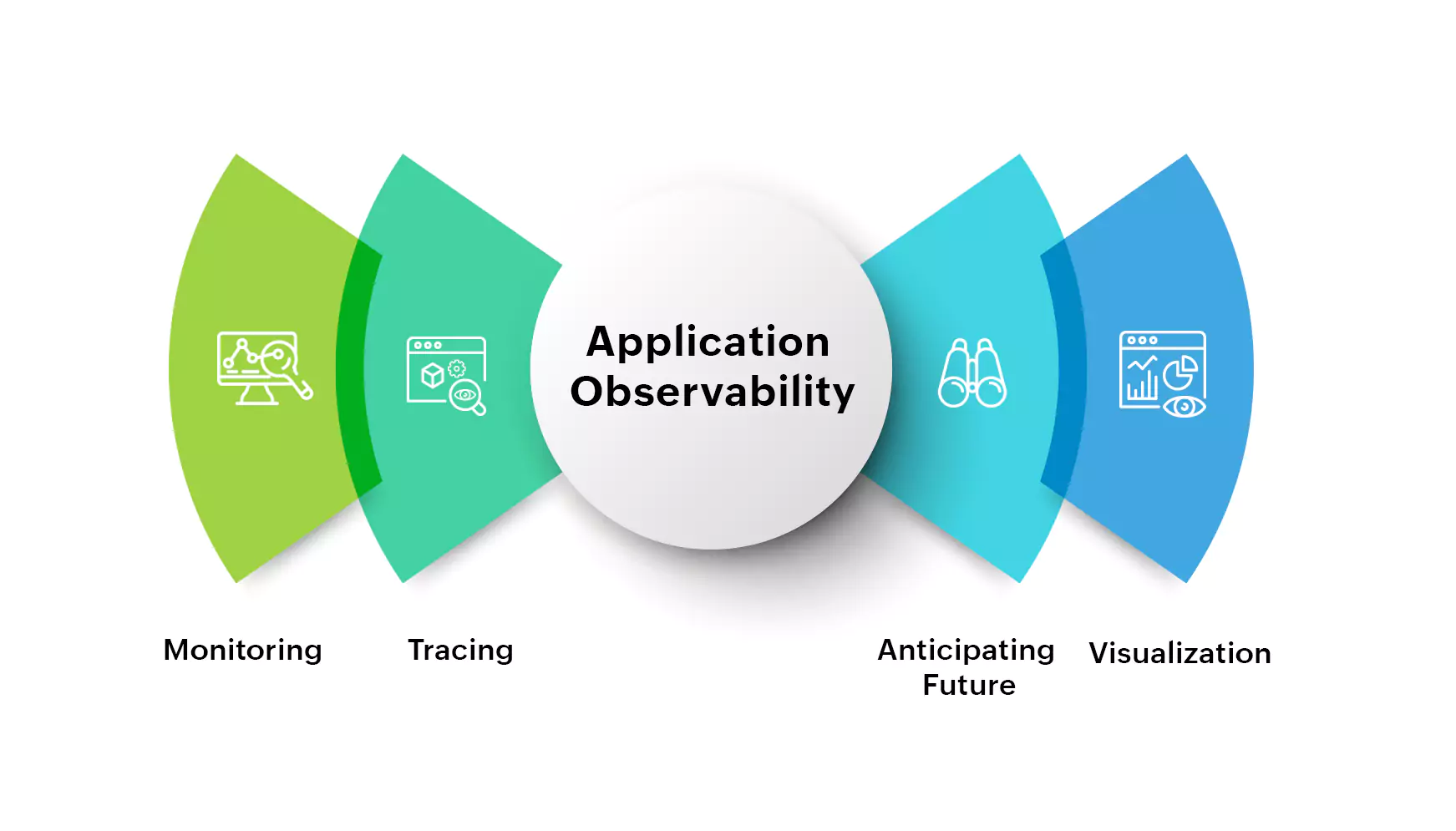

This article provides a comprehensive overview of observability and monitoring concepts, tools, and practices. It covers major observability platforms like Datadog, Grafana, Splunk, and others, as well as key methodologies and frameworks. The pillars of observability – metrics, logs, traces, and events – are explored in depth, along with visualization techniques, alerting strategies, and incident response processes. Machine learning applications in observability and considerations for implementing an effective observability strategy are also discussed.

Introduction

As modern software systems grow increasingly complex and distributed, the ability to understand system behavior and quickly troubleshoot issues has become critical. This is where observability and monitoring come into play. Observability refers to the ability to infer a system’s internal state from its external outputs, while monitoring involves collecting and analyzing data about system performance and health.

This article aims to provide a comprehensive introduction to observability and monitoring concepts, tools, and practices for those new to the field. We will explore major observability platforms, key methodologies, and the core pillars of metrics, logs, traces, and events. Practical implementation guidance and considerations for building an effective observability strategy will also be covered.

Overview of Major Observability Tools and Platforms

Datadog

Datadog is a monitoring and analytics platform for cloud-scale applications. Founded in 2010, Datadog provides observability across the entire technology stack, including infrastructure, application performance, logs, and user experience[1].

Key features:

- Infrastructure monitoring

- Application performance monitoring (APM)

- Log management

- User experience monitoring

- Network performance monitoring

- Security monitoring

Datadog uses a SaaS-based model and provides over 400 built-in integrations with popular technologies. Its unified platform approach allows correlation of metrics, traces, and logs in a single interface.

Grafana

Grafana is an open-source analytics and interactive visualization web application. First released in 2014, Grafana has become one of the most popular open-source dashboarding tools[2].

Key features:

- Metric visualization

- Alerting

- Unified dashboards

- Data source plugins

- Annotation support

Grafana itself does not store telemetry. It queries external data sources and is most widely used for metrics dashboards. The broader Grafana ecosystem adds storage backends such as Loki for logs, Tempo for distributed tracing, and Mimir for metrics.

OpenTelemetry

OpenTelemetry is an open-source observability framework for cloud-native software. Launched in 2019, OpenTelemetry aims to provide vendor-neutral APIs, libraries, agents, and instrumentation to facilitate the collection and export of telemetry data[3].

Key components:

- Specification

- SDKs and APIs

- Collector

- Instrumentation libraries

OpenTelemetry is not an observability backend itself, but rather provides a standardized way to collect and transmit observability data to various backends.

Splunk

Splunk is a data platform for searching, monitoring, and analyzing machine-generated big data. Founded in 2003, Splunk has evolved from a log management tool to a comprehensive observability and security platform[4].

Key features:

- Log management and analysis

- Application performance monitoring

- Infrastructure monitoring

- Security information and event management (SIEM)

- IT service intelligence

Splunk offers both on-premises and cloud-based deployments and is known for its powerful search and analytics capabilities across large volumes of data.

Nagios

Nagios is an open-source monitoring system for computer systems, networks, and infrastructure. First released in 1999 under the name NetSaint, Nagios is one of the oldest and most widely used monitoring tools[5].

Key features:

- Network monitoring

- Server and service monitoring

- Application monitoring

- Log monitoring

- Performance graphing

While Nagios Core is open-source, there is also a commercial version called Nagios XI with additional features and a more user-friendly interface.

AppDynamics

AppDynamics, founded in 2008 and acquired by Cisco in 2017, is an application performance management (APM) and IT operations analytics (ITOA) company[6].

Key features:

- Application performance monitoring

- End-user monitoring

- Infrastructure visibility

- Business performance monitoring

- AIOps

AppDynamics focuses on providing deep visibility into application performance and its impact on business outcomes.

Thanos

Thanos is an open-source project that extends Prometheus’s capabilities with long-term storage, high availability, and global query view. It was first released in 2018[7].

Key features:

- Global query view

- Unlimited retention

- Downsampling and compaction

- Deduplication

- Backup capabilities

Thanos is often used in conjunction with Prometheus to address some of Prometheus’s limitations in large-scale deployments.

Prometheus

Prometheus is an open-source monitoring and alerting toolkit. Created at SoundCloud in 2012, Prometheus has become one of the most popular monitoring solutions, especially in cloud-native environments[8].

Key features:

- Multidimensional data model

- Flexible query language (PromQL)

- Pull-based metrics collection

- Service discovery

- Alerting

Prometheus is often used for metrics collection and alerting, while other tools may be used for logs and traces.

Elastic (Elasticsearch)

Elasticsearch is a distributed, RESTful search and analytics engine. First released in 2010, Elasticsearch forms the core of the Elastic Stack (formerly known as the ELK Stack)[9].

Key features:

- Full-text search

- Log and event data analysis

- Application performance monitoring

- Infrastructure monitoring

- Security information and event management (SIEM)

While Elasticsearch started as a search engine, it has evolved into a comprehensive observability and analytics platform when combined with other components of the Elastic Stack like Logstash and Kibana.

Pillars of Observability

Observability is often described in terms of three pillars (metrics, logs, and traces); this article also treats events as a fourth pillar, a framing sometimes abbreviated as MELT. Each provides a different perspective on system behavior and performance.

Metrics

Metrics are numerical measurements of system behavior over time. They provide a high-level view of system performance and health.

Types of metrics:

- Counters: Cumulative measurements that only increase (e.g., total requests)

- Gauges: Measurements that can increase or decrease (e.g., current CPU usage)

- Histograms: Measurements that sample observations and count them in configurable buckets

- Summaries: Similar to histograms, but can calculate quantiles over a sliding time window
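To make the differences between these types concrete, here is a minimal in-process sketch of a counter, a gauge, and a histogram. It is an illustration under simple assumptions, not any client library's API: the class names are hypothetical, and the histogram reports cumulative "less than or equal to" buckets in the style Prometheus uses. Summaries are omitted because they require a streaming quantile estimator.

```python
import bisect


class Counter:
    """Cumulative value that only goes up (in practice it resets when the process restarts)."""

    def __init__(self) -> None:
        self.value = 0.0

    def inc(self, amount: float = 1.0) -> None:
        if amount < 0:
            raise ValueError("counters can only increase")
        self.value += amount


class Gauge:
    """Point-in-time value that can move in either direction."""

    def __init__(self) -> None:
        self.value = 0.0

    def set(self, value: float) -> None:
        self.value = value


class Histogram:
    """Counts observations into buckets defined by upper bounds."""

    def __init__(self, bounds: list[float]) -> None:
        self.bounds = sorted(bounds) + [float("inf")]  # +Inf catches everything else
        self.bucket_counts = [0] * len(self.bounds)     # per-bucket (non-cumulative) counts
        self.count = 0
        self.sum = 0.0

    def observe(self, value: float) -> None:
        # First bound >= value, so each bucket means "value <= bound"
        idx = bisect.bisect_left(self.bounds, value)
        self.bucket_counts[idx] += 1
        self.count += 1
        self.sum += value

    def cumulative(self) -> dict[float, int]:
        """Each bucket counts all observations <= its upper bound."""
        result, running = {}, 0
        for bound, n in zip(self.bounds, self.bucket_counts):
            running += n
            result[bound] = running
        return result


h = Histogram([0.1, 0.5, 1.0])  # hypothetical request-duration buckets, in seconds
for duration in [0.05, 0.2, 0.3, 0.7, 2.0]:
    h.observe(duration)
print(h.cumulative())  # {0.1: 1, 0.5: 3, 1.0: 4, inf: 5}
```

Storing only bucket counts, a total count, and a sum keeps a histogram's size fixed no matter how many observations arrive, which is why it aggregates cheaply across instances.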

The Four Golden Signals, as defined by Google’s Site Reliability Engineering book, are key metrics for monitoring distributed systems:

- Latency: Time taken to serve a request

- Traffic: Amount of demand on the system

- Errors: Rate of requests that fail

- Saturation: How “full” the service is
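As a sketch of how the four signals might be derived from one time window of request data, the function below assumes a hypothetical `Request` record, treats HTTP 5xx responses as errors, uses a nearest-rank p99 for latency, and measures saturation as the in-use fraction of whichever resource is most constrained (for example, a worker pool). Real systems usually compute these from metrics rather than raw requests; the names here are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    duration_s: float  # time taken to serve the request
    status: int        # HTTP status code


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def golden_signals(requests: list[Request], window_s: float,
                   in_use: float, capacity: float) -> dict[str, float]:
    """Summarize one time window of requests as the four golden signals."""
    if not requests:
        raise ValueError("need at least one request in the window")
    failed = sum(1 for r in requests if r.status >= 500)  # assumption: 5xx = error
    return {
        "latency_p99_s": percentile([r.duration_s for r in requests], 99),
        "traffic_rps": len(requests) / window_s,
        "error_rate": failed / len(requests),
        "saturation": in_use / capacity,  # fraction of the constrained resource in use
    }


window = [Request(0.1, 200), Request(0.2, 200), Request(0.3, 500),
          Request(0.4, 200), Request(1.5, 503)]
print(golden_signals(window, window_s=10, in_use=30, capacity=40))
```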

When choosing metrics, consider the following characteristics:

- Understandable: The metric should be easily interpreted

- Actionable: It should be clear what action to take based on the metric

- Improvable: There should be a way to influence the metric

- Multidimensional: The metric should provide context through labels or tags

Logs

Logs are timestamped records of discrete events that happened in the system. They provide detailed information about specific occurrences.

Best practices for logging:

- Use structured logging: Include metadata in a machine-parseable format

- Log at appropriate levels: Use debug, info, warn, error levels judiciously

- Include context: Add relevant details like request IDs, user IDs, etc.

- Be consistent: Use a standard format across your applications
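The practices above can be sketched with Python's standard `logging` module: a formatter that emits one JSON object per line and copies request context passed through `extra=`. The field names (`ts`, `level`, `msg`) and the choice of `request_id` and `user_id` as context keys are assumptions for illustration, not a standard schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single machine-parseable JSON line."""

    # Context keys copied from `extra=` into the output (illustrative choice)
    CONTEXT_KEYS = ("request_id", "user_id")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname.lower(),
            "logger": record.name,
            "msg": record.getMessage(),
        }
        for key in self.CONTEXT_KEYS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Configure a logger once per name; calling twice would add a second handler."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)  # debug records are dropped at this level
    logger.propagate = False
    return logger


log = make_logger("checkout")
log.info("payment authorized", extra={"request_id": "req-123", "user_id": "u-42"})
```

Because every line is valid JSON with consistent keys, a log backend can index `request_id` directly instead of parsing free text.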

Log management involves collecting, centralizing, and analyzing logs. Tools like Loki (part of the Grafana ecosystem) or the ELK stack (Elasticsearch, Logstash, Kibana) are commonly used for log management.

Traces

Traces provide visibility into the path of a request as it propagates through a distributed system. They are particularly useful for understanding performance in microservices architectures.

Key concepts in tracing:

- Spans: Represent a unit of work in a trace

- Trace ID: Unique identifier for a trace that connects all its spans

- Parent-child relationships: Show how spans are related within a trace
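The span concepts can be illustrated with a small, dependency-free model: every span shares its trace's ID and records its parent's span ID, which is enough to rebuild the call tree. This is a hypothetical sketch, not the OpenTelemetry API; the ID sizes (16-byte trace IDs, 8-byte span IDs, hex-encoded) follow the W3C Trace Context convention.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str                    # shared by every span in the trace
    parent_id: Optional[str] = None  # None marks the root span
    span_id: str = field(default_factory=lambda: secrets.token_hex(8))
    start: float = field(default_factory=time.monotonic)
    end: Optional[float] = None

    def child(self, name: str) -> "Span":
        # A child inherits the trace ID and points back at its parent
        return Span(name=name, trace_id=self.trace_id, parent_id=self.span_id)

    def finish(self) -> None:
        self.end = time.monotonic()


def new_trace(name: str) -> Span:
    return Span(name=name, trace_id=secrets.token_hex(16))


def render(spans: list[Span]) -> list[str]:
    """Indent each span under its parent to show the call tree."""
    children: dict[Optional[str], list[Span]] = {}
    for s in spans:
        children.setdefault(s.parent_id, []).append(s)
    lines: list[str] = []

    def walk(parent: Optional[str], depth: int) -> None:
        for s in children.get(parent, []):
            lines.append("  " * depth + s.name)
            walk(s.span_id, depth + 1)

    walk(None, 0)
    return lines


root = new_trace("GET /checkout")
db = root.child("SELECT cart")
pay = root.child("POST /payments")
fraud = pay.child("fraud-check")
for s in (fraud, pay, db, root):
    s.finish()
print("\n".join(render([root, db, pay, fraud])))
```

In a real distributed system the trace ID and parent span ID travel between services in request headers, so each service can create correctly linked child spans.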

OpenTelemetry provides a standardized way to instrument applications for distributed tracing. Tracing backends such as Jaeger or Tempo (part of the Grafana ecosystem, typically explored through Grafana) store the resulting traces and let you search and visualize them.

Events

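Events are discrete occurrences that represent a significant change in the system. Logs are also discrete records, but they typically capture routine activity at high volume, whereas events are reserved for important state changes or incidents such as deployments, configuration changes, or outages.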

Types of events:

- System events: Changes in system state (e.g., service start/stop)

- Business events: Significant occurrences from a business perspective (e.g., order placed)

- Security events: Security-related occurrences (e.g., failed login attempts)

Event correlation and analysis can provide insights into system behavior and help in root cause analysis. Tools like Moogsoft use machine learning for event correlation and anomaly detection.
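Machine-learning correlation is beyond a short example, but the basic idea can be shown with a much simpler technique: grouping events that arrive close together in time, on the assumption that they may share a cause. The sketch below is that plain time-window grouping (not what Moogsoft or any specific product does); the `Event` type and the `gap_s` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    ts: float     # seconds since some reference time
    kind: str     # e.g. "system", "business", "security"
    message: str


def correlate(events: list[Event], gap_s: float) -> list[list[Event]]:
    """Group events whose timestamps are within gap_s of the previous event."""
    groups: list[list[Event]] = []
    for ev in sorted(events, key=lambda e: e.ts):
        if groups and ev.ts - groups[-1][-1].ts <= gap_s:
            groups[-1].append(ev)  # close enough: same candidate incident
        else:
            groups.append([ev])    # gap too large: start a new group
    return groups


events = [
    Event(600, "business", "order placed"),
    Event(0, "system", "deploy started"),
    Event(20, "system", "api restarted"),
    Event(45, "security", "failed login burst"),
]
for group in correlate(events, gap_s=60):
    print([e.message for e in group])
```

Time proximity alone produces false groupings on busy systems, which is one reason commercial tools also weigh topology and text similarity.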

Implementing Observability

Instrumentation

Instrumentation is the process of adding code to your application to collect observability data. This can be done manually or through automatic instrumentation provided by observability tools.

OpenTelemetry provides a standardized way to instrument applications for metrics, logs, and traces. Many observability platforms also provide their own SDKs and agents for instrumentation.
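Manual instrumentation often amounts to wrapping a unit of work so that it records telemetry as a side effect. The decorator below is a minimal sketch of that pattern using only the standard library: it counts calls and errors and records durations into in-process dictionaries that stand in for a real metrics SDK. The names `instrumented`, `CALLS`, `ERRORS`, `DURATIONS`, and `charge` are hypothetical.

```python
import functools
import time
from collections import defaultdict

# In-process stores standing in for a real metrics SDK (hypothetical)
CALLS: dict[str, int] = defaultdict(int)
ERRORS: dict[str, int] = defaultdict(int)
DURATIONS: dict[str, list[float]] = defaultdict(list)


def instrumented(func):
    """Record call count, error count, and wall-clock duration for func."""
    name = func.__qualname__

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        CALLS[name] += 1
        try:
            return func(*args, **kwargs)
        except Exception:
            ERRORS[name] += 1
            raise  # instrumentation must not swallow the error
        finally:
            DURATIONS[name].append(time.perf_counter() - start)

    return wrapper


@instrumented
def charge(amount: float) -> str:
    if amount <= 0:
        raise ValueError("amount must be positive")
    return "ok"
```

Automatic instrumentation applies the same idea without code changes, by wrapping common libraries (HTTP clients, database drivers) at load time.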

Data Collection and Storage

Once instrumented, data needs to be collected and stored. This typically involves:

- Agents or collectors that gather data from various sources

- A central repository or database for storing the data

- Data processing pipelines for aggregation, filtering, and enrichment
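The filter, enrich, and aggregate stages of a processing pipeline can be sketched as composable generator functions. This is loosely analogous to how a collector chains processors, but the record shape (plain dicts with `level` and `service` keys) and all function names are assumptions for illustration.

```python
from collections import Counter
from typing import Callable, Iterable, Iterator

Record = dict
Processor = Callable[[Iterable[Record]], Iterator[Record]]


def drop_debug(records: Iterable[Record]) -> Iterator[Record]:
    # Filtering: discard low-value records before they reach storage
    for r in records:
        if r.get("level") != "debug":
            yield r


def add_env(env: str) -> Processor:
    # Enrichment: attach deployment metadata the source did not include
    def process(records: Iterable[Record]) -> Iterator[Record]:
        for r in records:
            yield {**r, "env": env}  # copy, so the original record is untouched
    return process


def run_pipeline(records: Iterable[Record], processors: list[Processor]) -> list[Record]:
    for p in processors:
        records = p(records)  # chain lazily; nothing runs until list() below
    return list(records)


def count_by(records: Iterable[Record], key: str) -> dict:
    # Aggregation: collapse many records into per-key totals
    return dict(Counter(r[key] for r in records))


raw = [
    {"level": "info", "service": "api"},
    {"level": "debug", "service": "api"},
    {"level": "error", "service": "db"},
    {"level": "info", "service": "api"},
]
processed = run_pipeline(raw, [drop_debug, add_env("prod")])
print(count_by(processed, "service"))
```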

Different tools have different approaches. For example:

- Prometheus uses a pull-based model where it scrapes metrics from instrumented targets

- Datadog uses agents installed on hosts to collect and send data to its SaaS platform

- The ELK stack uses Logstash or Beats to collect and send data to Elasticsearch

Visualization and Dashboards

Effective visualization is crucial for making sense of observability data. Tools like Grafana provide flexible dashboarding capabilities, allowing you to create custom views of your metrics, logs, and traces.

Best practices for dashboards:

- Focus on key metrics that provide actionable insights

- Use appropriate chart types for different kinds of data

- Provide context through annotations and variable time ranges

- Design for different audiences (e.g., developers, operations, business stakeholders)

Alerting

Alerting is the process of notifying relevant personnel when certain conditions are met. Effective alerting is critical for timely incident response.

Key considerations for alerting:

- Define clear thresholds based on SLOs (Service Level Objectives)

- Use tiered severities to reduce noise (e.g., a warning that escalates to critical if the condition persists or worsens)

- Provide context in alert notifications to aid in quick diagnosis

- Implement escalation policies for unacknowledged alerts
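The first two considerations can be combined in a small evaluator: thresholds are expressed relative to the error budget implied by an SLO, there are two severity tiers, and a condition must hold for several consecutive evaluations before it fires (similar in spirit to the `for` clause in Prometheus alerting rules). The multipliers 2 and 10 and the three-evaluation requirement are hypothetical values chosen for illustration, not recommendations.

```python
from dataclasses import dataclass, field


@dataclass
class TieredAlert:
    slo: float              # e.g. 0.999 for 99.9% success; must be < 1
    warn_burn: float = 2.0  # hypothetical multiples of the error budget rate
    crit_burn: float = 10.0
    for_evals: int = 3      # consecutive breaches required before firing
    _streak: dict = field(default_factory=lambda: {"warning": 0, "critical": 0},
                          init=False, repr=False)

    def evaluate(self, error_rate: float) -> str:
        """Return 'ok', 'warning', or 'critical' for one evaluation tick."""
        burn = error_rate / (1 - self.slo)  # how fast the error budget is being spent
        for level, limit in (("critical", self.crit_burn), ("warning", self.warn_burn)):
            # Extend the streak while breaching; any healthy tick resets it
            self._streak[level] = self._streak[level] + 1 if burn >= limit else 0
        for level in ("critical", "warning"):
            if self._streak[level] >= self.for_evals:
                return level
        return "ok"


alert = TieredAlert(slo=0.999)
ticks = [0.005, 0.005, 0.005, 0.02, 0.02, 0.02, 0.0]
print([alert.evaluate(rate) for rate in ticks])
```

Requiring a sustained breach suppresses alerts from single noisy samples, at the cost of a short delay before notification.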

Tools like PagerDuty or Opsgenie are often used in conjunction with observability platforms for alert management and on-call scheduling.

Advanced Topics in Observability

Machine Learning and AI in Observability

Machine learning and AI are increasingly being applied in observability to provide more intelligent insights and automate certain tasks.

Applications of ML/AI in observability:

- Anomaly detection: Identifying unusual patterns in metrics or logs

- Root cause analysis: Suggesting potential causes for observed issues

- Predictive maintenance: Forecasting potential issues before they occur

- Automated remediation: Taking automatic actions to resolve common issues

Tools like Datadog and Splunk incorporate machine learning capabilities into their platforms to provide these advanced features.
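Commercial anomaly detection is considerably more sophisticated, but a rolling z-score is a common statistical baseline that shows the core idea: flag a point that sits far outside the recent distribution. The sketch below is that simple baseline, not any vendor's algorithm; `window` and `threshold` are hypothetical tuning parameters.

```python
import statistics
from collections import deque


def zscore_anomalies(series: list[float], window: int = 10,
                     threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` points."""
    history: deque = deque(maxlen=window)
    flagged = []
    for i, x in enumerate(series):
        if len(history) == window:
            mean = statistics.fmean(history)
            sd = statistics.pstdev(history)
            # A perfectly flat baseline (sd == 0) is skipped rather than divided by
            if sd > 0 and abs(x - mean) / sd > threshold:
                flagged.append(i)
        history.append(x)
    return flagged


latency_ms = [10, 11, 10, 9, 10, 11, 10, 9, 10, 11, 30, 10]
print(zscore_anomalies(latency_ms))  # [10]
```

Note that the spike then enters the baseline and widens it for the next `window` points, one of several weaknesses (along with seasonality) that motivate learned models.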

Observability in Kubernetes and Cloud-Native Environments

Cloud-native environments present unique challenges for observability due to their dynamic and distributed nature.

Key considerations for Kubernetes observability:

- Collecting metrics from multiple layers (infrastructure, Kubernetes, applications)

- Handling high cardinality data due to the large number of objects and labels

- Tracing requests across multiple microservices

- Managing short-lived containers and serverless functions

Tools like Prometheus and Grafana are popular choices for Kubernetes observability, often deployed using the kube-prometheus-stack Helm chart.
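Cardinality is easiest to reason about with a little arithmetic: each unique combination of label values is a separate time series, so the worst case for one metric is the product of the number of distinct values per label. The helpers below sketch that upper bound and an exact count from observed label sets; the label names and counts are hypothetical.

```python
import math


def worst_case_series(distinct_values: dict[str, int]) -> int:
    """Upper bound on series for one metric: product of distinct values per label."""
    return math.prod(distinct_values.values())  # no labels -> exactly one series


def observed_series(samples: list[dict[str, str]]) -> int:
    """Exact count of unique label sets (label order does not matter)."""
    return len({tuple(sorted(s.items())) for s in samples})


base = {"method": 4, "status_code": 5}
print(worst_case_series(base))                  # 20
print(worst_case_series({**base, "pod": 500}))  # 10000
```

Adding a per-pod label multiplies the series count by the number of pods, and pod names change on every rollout, which is why such labels are a frequent source of cardinality problems.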

Continuous Improvement and SRE Practices

Observability is not a one-time setup but a continuous process of improvement. Site Reliability Engineering (SRE) practices provide a framework for this ongoing refinement.

Key SRE practices related to observability:

- Defining and tracking Service Level Indicators (SLIs) and Objectives (SLOs)

- Implementing error budgets to balance reliability and innovation

- Conducting blameless postmortems after incidents to drive improvements

- Using toil analysis to identify and automate repetitive operational work
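Error budgets follow directly from an SLO: a 99.9% target leaves 0.1% of the period (or of requests) available for failure. The helpers below work through that arithmetic for both time-based and request-based SLOs; the function names are hypothetical.

```python
from datetime import timedelta


def allowed_downtime(slo: float, period: timedelta) -> timedelta:
    """Time a service may be unavailable in `period` without breaching a time-based SLO."""
    return period * (1 - slo)


def budget_remaining(slo: float, total: int, failed: int) -> float:
    """Fraction of a request-based error budget still unspent (negative = overspent)."""
    if not 0 < slo < 1:
        raise ValueError("slo must be between 0 and 1")
    allowed = total * (1 - slo)  # failures the SLO tolerates
    return 1 - failed / allowed


print(allowed_downtime(0.999, timedelta(days=30)))      # 0:43:12
print(round(budget_remaining(0.999, 1_000_000, 250), 4))  # 0.75
```

Over 30 days, 99.9% allows 43.2 minutes of downtime. If 250 of 1,000,000 requests have failed, a quarter of the budget is spent, and the remaining three quarters can be "spent" on riskier releases.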

Challenges and Considerations

Data Volume and Cost

As systems grow, the volume of observability data can become overwhelming, leading to significant storage and processing costs.

Strategies for managing data volume:

- Implement data retention policies

- Use sampling for high-volume data (e.g., traces)

- Aggregate data at different resolutions (e.g., raw data for recent history, aggregated data for long-term storage)
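Two of these strategies can be sketched in a few lines. The sampler treats the low 64 bits of a trace ID as a uniform random number, so every service that sees the same trace makes the same keep-or-drop decision without coordinating; this is similar in spirit to OpenTelemetry's trace-ID-ratio sampler, but the exact bit selection here is an assumption. The downsampler averages raw points into fixed-width buckets for long-term storage.

```python
from collections import defaultdict


def keep_trace(trace_id_hex: str, ratio: float) -> bool:
    """Deterministic head sampling keyed on the trace ID."""
    low64 = int(trace_id_hex[-16:], 16)  # assumes IDs are random in their low bits
    return low64 < ratio * 2**64


def downsample(points: list[tuple[float, float]],
               step_s: float) -> list[tuple[float, float]]:
    """Average raw (timestamp, value) points into fixed step_s buckets."""
    buckets: dict[float, list[float]] = defaultdict(list)
    for ts, value in points:
        buckets[ts - ts % step_s].append(value)  # align to bucket start
    return [(start, sum(vals) / len(vals)) for start, vals in sorted(buckets.items())]


raw = [(0, 1.0), (30, 3.0), (60, 10.0), (90, 20.0), (120, 5.0)]
print(downsample(raw, step_s=60))  # [(0, 2.0), (60, 15.0), (120, 5.0)]
```

Averaging loses extremes, so downsampled series are often stored alongside min, max, and count per bucket when spikes matter.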

Tool Sprawl and Integration

With the proliferation of observability tools, many organizations face challenges with tool sprawl and integration.

Approaches to address this:

- Adopt platforms that cover multiple observability pillars (e.g., Datadog, Splunk)

- Use OpenTelemetry for standardized instrumentation across different backends

- Implement a central observability portal or “single pane of glass” view

Privacy and Security

Observability data often contains sensitive information, raising privacy and security concerns.

Key considerations:

- Implement data masking for sensitive fields

- Ensure secure transmission and storage of observability data

- Implement access controls and audit logging for observability platforms

- Comply with relevant regulations (e.g., GDPR, HIPAA)
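Data masking is easiest to enforce at the point where records are produced, before they leave the host. The function below sketches one approach: redact values under known-sensitive keys (recursing into nested objects) and replace email addresses embedded in free text. The key list and the email pattern are illustrative assumptions and would need to match your own data and regulatory requirements.

```python
import re

SENSITIVE_KEYS = {"password", "token", "ssn", "card_number"}  # illustrative list
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask(record: dict) -> dict:
    """Return a copy of a log record with sensitive fields redacted."""
    clean = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, dict):
            clean[key] = mask(value)  # nested objects get the same treatment
        elif isinstance(value, str):
            clean[key] = EMAIL_RE.sub("[EMAIL]", value)
        else:
            clean[key] = value
    return clean


event = {"msg": "reset sent to ann@example.com", "password": "hunter2",
         "user": {"token": "abc", "id": 7}}
print(mask(event))
```

Key-based rules are predictable but miss secrets in unexpected fields, while pattern-based rules catch more but can over-redact; many teams use both.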

Future Trends in Observability

AIOps and Automated Remediation

As AI and machine learning capabilities advance, we can expect to see more automated analysis and remediation of issues based on observability data.

Observability-Driven Development

Observability is likely to become an integral part of the development process, with developers considering observability requirements from the outset.

Edge and IoT Observability

As edge computing and IoT deployments grow, observability solutions will need to adapt to handle the unique challenges of these environments, such as limited connectivity and resource constraints.

Unified Observability Platforms

We may see further consolidation in the observability market, with platforms offering more comprehensive coverage across metrics, logs, traces, and other telemetry data.

Conclusion

Observability has become a critical practice for managing modern, complex systems. By leveraging the pillars of metrics, logs, traces, and events, and utilizing advanced tools and techniques, organizations can gain deep insights into their systems’ behavior and performance.

As the field continues to evolve, staying informed about new tools, best practices, and emerging trends will be crucial for maintaining effective observability strategies. Whether you’re just starting out or looking to enhance your existing observability practices, the concepts and tools discussed in this article provide a solid foundation for your journey.

References

[1] Datadog. (n.d.). About Us. https://www.datadoghq.com/about/

[2] Grafana Labs. (n.d.). About Grafana. https://grafana.com/about/

[3] OpenTelemetry. (n.d.). About OpenTelemetry. https://opentelemetry.io/about/

[4] Splunk. (n.d.). About Splunk. https://www.splunk.com/en_us/about-splunk.html

[5] Nagios. (n.d.). About Nagios. https://www.nagios.org/about/

[6] AppDynamics. (n.d.). About Us. https://www.appdynamics.com/company/about-us

[7] Thanos. (n.d.). Quick Tutorial. https://thanos.io/tip/thanos/quick-tutorial.md/

[8] Prometheus. (n.d.). Overview. https://prometheus.io/docs/introduction/overview/

[9] Elastic. (n.d.). About Us. https://www.elastic.co/about/