Abstract

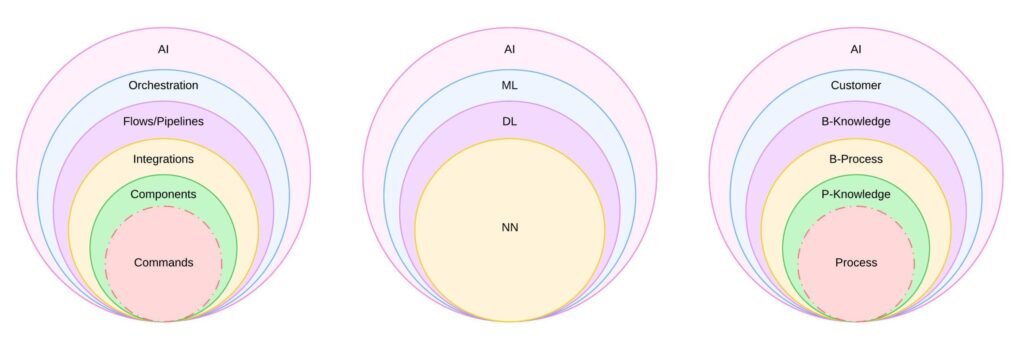

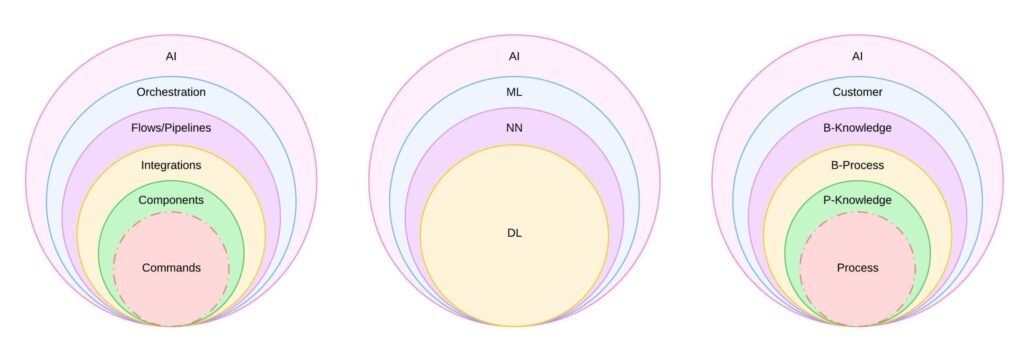

Automation has undergone transformative changes since its inception, driven by the need for efficiency, scalability, and real-time data processing. This article examines the historical progression of automation technologies, beginning with manual command-line executions and evolving into AI-driven orchestration systems. Each phase—scripting, reusable components, API integrations, CI/CD pipelines, and AI/ML—has contributed to accelerating data flow between systems, enabling faster decision-making and innovation. By analyzing these milestones, this article highlights how automation has become a cornerstone of modern business processes and explores future trends poised to redefine data velocity.

Keywords: Automation, Data Velocity, AI/ML, CI/CD, API Integrations, Orchestration

Introduction

Automation has revolutionized how organizations process information, delivering unprecedented speed and accuracy by eliminating manual intervention, optimizing workflows, and enabling seamless data exchange. From early command-line interfaces to AI-driven neural networks, each technological advance has addressed limitations in scalability, interoperability (cross-platform data flows), and complexity while requiring progressively less human input. This article explores how automation’s iterative improvements (scripting, modular components, APIs, and orchestration frameworks) have collectively accelerated data velocity, laying the groundwork for today’s real-time decision-making ecosystems.

Early Automation: Command-Line Executions and Scripts

The Dawn of Manual Commands

The roots of automation lie in command-line executions, where users manually entered instructions to retrieve system information. In the mid-20th century, operators relied on command-line interfaces (CLIs) to query system processes or hardware status. Early operating systems like Unix (developed in the 1970s) introduced shells (e.g., the Bourne shell) that allowed users to execute commands for file management, process monitoring, and system diagnostics (Firgelliauto, 2023). Commands such as ls (list files), grep (search text), ps (report process status), and top (monitor resource usage) became foundational tools for administrators.

While CLIs provided granular control, they required repetitive manual input, leading to inefficiencies. For example, system administrators had to memorize syntax and repeatedly execute commands to monitor server health, which caused delays and invited human error (Brito, 2022).

Scripting: The First Leap Toward Reproducibility

From the late 1970s through the early 1990s, shell scripting (first with the Bourne shell, later Bash) and scripting languages such as Perl and Python emerged to automate repetitive CLI tasks. Scripts bundled commands into reusable sequences, reducing human error and enabling batch processing[2][16]. For instance, a script could automatically log system metrics or deploy code across servers. This era marked the conceptual birth of “automation” as a means to minimize manual effort.

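Example Scripts (Bash):

```bash
#!/bin/bash
# Monitor CPU usage: take one snapshot in batch mode (-b) so top
# works without an interactive terminal (e.g. from cron), then
# append the CPU summary line to a metrics log
top -b -n 1 | grep "Cpu" >> /var/log/system_metrics.log
```

```bash
#!/bin/bash
# Automate log file cleanup: delete .log files not modified in the last 7 days
find /var/log -name "*.log" -type f -mtime +7 -exec rm {} \;
```

Scripting enabled batch processing and scheduled tasks (e.g., cron jobs), accelerating data workflows. However, scripts were often rigid, requiring modifications for new use cases (Kalil, 2024).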

Reusable Components and Workflow Automation

Modularization: Subroutines and Libraries

By the 1990s, developers began modularizing scripts into reusable functions, subroutines, and shared libraries. Subroutines allowed teams to standardize tasks like file transfers or log parsing, fostering consistency across projects[7][15]; later standard-library modules such as Python’s subprocess (added in Python 2.4) packaged common operations like running shell commands behind a reusable interface. Reusable components reduced redundancy and improved maintainability: a study by HubSpot (2024) found that organizations using modular automation reduced development time by 40%.
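The shift from ad hoc commands to reusable functions can be illustrated with a minimal Python sketch. It wraps the standard-library `subprocess.run` call in a helper that any script could import, and pairs it with a small log-parsing helper; the names `run_command` and `count_matching_lines` are hypothetical, chosen only for illustration.

```python
import subprocess
import sys
from typing import Sequence


def run_command(args: Sequence[str], timeout: float = 30.0) -> str:
    """Run a command and return its stdout; raise if it exits non-zero."""
    result = subprocess.run(
        list(args),
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode output to str
        timeout=timeout,
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


def count_matching_lines(text: str, pattern: str) -> int:
    """Count lines containing `pattern`, a reusable stand-in for `grep -c`."""
    return sum(1 for line in text.splitlines() if pattern in line)


if __name__ == "__main__":
    # The current Python interpreter serves as a portable example command.
    output = run_command(
        [sys.executable, "-c", "print('ERROR a'); print('ok'); print('ERROR b')"]
    )
    print(count_matching_lines(output, "ERROR"))  # prints 2
```

Once such helpers live in a shared module, every script that needs to run a command or scan a log calls the same tested code instead of re-implementing it.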

Workflow Engines and GUI-Based Tools

Graphical user interface (GUI) tools such as AutoMate (1990s) and, later, ServiceNow’s Flow Designer (2010s) enabled drag-and-drop workflow creation. Platforms like LEAPWORK let users assemble workflows from reusable components, such as authentication modules or API calls (LEAPWORK, 2024); for example, a “User Login” sub-flow could be reused across multiple workflows. These platforms abstracted scripting complexities, allowing non-technical users to chain predefined actions (e.g., “Send Email” or “Update Database”) into multi-step processes[3][9].

Key Innovations:

- PLCs (1960s): Programmable logic controllers automated industrial assembly lines, replacing hardwired relays[1][3].

- SCADA Systems (1980s): Supervisory control systems centralized monitoring of distributed sensors, enabling predictive maintenance[7][15].

System Integrations and API-Driven Automation

The Rise of Web Services

The 2000s introduced REST and SOAP APIs, standardizing system-to-system communication. APIs allowed applications to exchange data via HTTP methods and JSON/XML payloads, secured by authentication mechanisms such as JSON Web Tokens (JWTs), which were later standardized in RFC 7519 (2015)[6][11][27]. APIs became the standard for integrating cloud services (SnapLogic, 2024). For example, Oracle’s HCM Cloud adopted JWT for API authentication, enabling seamless integration with third-party payroll systems[11].

API Authentication Workflow (JWT):

- Client generates a JWT signed with a private key.

- Server validates the token’s signature using a public key.

- Access is granted if the token’s claims (e.g., user role) match endpoint requirements[6][27].
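The three steps above can be sketched with Python’s standard library. One deliberate substitution: because the standard library has no RSA or ECDSA signing, this sketch signs with a shared-secret HMAC (the HS256 algorithm) instead of the private/public key pair described above; production systems would typically rely on a maintained JWT library and asymmetric keys. The `role` claim, the secret, and the function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_encode(data: bytes) -> str:
    # JWTs use unpadded base64url encoding (RFC 7515).
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # Restore the padding stripped during encoding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def create_jwt(claims: dict, secret: bytes) -> str:
    """Step 1 (client): encode header and claims, then sign them."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return signing_input + "." + b64url_encode(sign(signing_input, secret))


def verify_jwt(token: str, secret: bytes, required_role: str) -> dict:
    """Steps 2-3 (server): check signature, expiry, and role; return the claims."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # reject algorithm substitution
    expected = sign(f"{header_b64}.{payload_b64}", secret)
    # compare_digest avoids leaking timing information about the signature.
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    claims = json.loads(b64url_decode(payload_b64))
    if claims.get("exp", 0) < time.time():
        raise ValueError("token expired")
    if claims.get("role") != required_role:
        raise ValueError("insufficient role")
    return claims


if __name__ == "__main__":
    secret = b"demo-shared-secret"  # hypothetical key; never hard-code real secrets
    token = create_jwt({"sub": "user-42", "role": "payroll", "exp": int(time.time()) + 300}, secret)
    print(verify_jwt(token, secret, required_role="payroll")["sub"])  # prints user-42
```

Checking the signature before trusting any claim matters: an attacker can edit the payload freely, but cannot produce a matching signature without the key.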

Salesforce’s REST API allows fetching customer data with a token-authenticated request, for example a SOQL query against the Contact object:

```http
GET /services/data/v50.0/query?q=SELECT+Id,Name,Email+FROM+Contact
Authorization: Bearer <token>
```

Middleware and ETL Tools

Tools like Apache NiFi (2010s) emerged to automate data pipelines, offering prebuilt connectors for databases, cloud platforms, and IoT devices[4][10]. NiFi’s visual interface simplified tasks like real-time data ingestion, transformation, and routing, reducing reliance on custom scripts[10][33].

Comparison of Data Integration Tools:

| Tool | Key Feature | Use Case |
|---|---|---|
| Apache NiFi | Real-time data provenance tracking | IoT sensor networks[10][33] |
| Google Dataflow | Auto-scaling batch/stream processing | BigQuery analytics[4][9] |
| Airbyte | 550+ prebuilt connectors | Multi-cloud synchronization[9] |

Security Protocols

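API security evolved with standards such as OAuth 2.0 and JWT (JSON Web Tokens), which secure data exchange between systems. Instead of long-lived credentials, clients receive short-lived access tokens that grant temporary, scoped access, limiting the damage if a token is leaked (TechTarget, 2025).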
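Because access tokens are short-lived, clients commonly cache a token and request a new one shortly before it expires. The sketch below assumes a hypothetical `fetch` callable that performs the actual token request (for example, an OAuth 2.0 client-credentials call) and returns the access token with its lifetime in seconds, mirroring the `expires_in` field of an OAuth 2.0 token response; the 30-second refresh margin is an arbitrary illustrative choice.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class CachedToken:
    value: str
    expires_at: float  # absolute time, in clock seconds


class TokenCache:
    """Reuse a short-lived access token and refresh it shortly before expiry."""

    def __init__(
        self,
        fetch: Callable[[], Tuple[str, int]],
        refresh_margin: float = 30.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._fetch = fetch  # hypothetical: returns (access_token, expires_in_seconds)
        self._margin = refresh_margin
        self._clock = clock
        self._token: Optional[CachedToken] = None

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._token.expires_at - self._margin:
            value, expires_in = self._fetch()  # request a fresh token
            self._token = CachedToken(value, now + expires_in)
        return self._token.value


if __name__ == "__main__":
    def demo_fetch() -> Tuple[str, int]:
        # Stand-in for a real token endpoint call.
        return "demo-access-token", 3600

    cache = TokenCache(demo_fetch)
    print(cache.get())  # prints demo-access-token
```

Injecting the clock keeps the refresh logic testable without waiting for a real token to expire.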

Orchestration Services and CI/CD Pipelines

DevOps and Continuous Integration

CI/CD pipelines (e.g., Jenkins, GitLab CI) automated software deployment, testing, and monitoring, linking version control, build systems, and cloud platforms. Pipelines ensured code changes were seamlessly integrated and deployed, minimizing downtime (TechTarget, 2024). For example, a pipeline could trigger automated tests upon a Git commit, then deploy to AWS if successful[9][13].

CI/CD Pipeline Stages:

- Code Commit: Developer pushes changes to Git.

- Build: Compile code into executable artifacts.

- Test: Run unit/integration tests.

- Deploy: Release to staging/production environments[9].
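The stage sequence above can be sketched as a fail-fast loop: each stage runs only if every earlier stage succeeded, which is what keeps a broken build from reaching deployment. This is a hypothetical, tool-agnostic model rather than Jenkins or GitLab CI syntax; the stage functions stand in for real build, test, and deploy commands.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure (fail fast)."""
    log: List[str] = []
    for name, step in stages:
        succeeded = step()
        log.append(f"{name}: {'passed' if succeeded else 'FAILED'}")
        if not succeeded:
            break  # later stages (e.g. Deploy) never run
    return log


if __name__ == "__main__":
    # Hypothetical stage implementations triggered by a commit.
    stages = [
        ("Build", lambda: True),
        ("Test", lambda: False),  # simulate a failing unit test
        ("Deploy", lambda: True),
    ]
    for line in run_pipeline(stages):
        print(line)
```

With the simulated test failure, the log stops at `Test: FAILED` and the Deploy stage is skipped.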

Impact on Data Velocity:

CI/CD reduced deployment cycles from weeks to hours, enabling faster iteration and real-time feedback (Prefect, 2022).

Orchestration: Coordinating Complex Workflows

Tools like Kubernetes and Airflow:

Orchestration frameworks managed multi-step workflows across distributed systems. Kubernetes automated containerized application scaling, while Apache Airflow scheduled data pipelines (IBM, 2024).
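Orchestrators such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks, running each task only after its upstream dependencies finish. The scheduling idea can be sketched with Python’s standard-library `graphlib` module (Python 3.9+); this is not Airflow’s API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical data pipeline: each task maps to the tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
    "notify_team": {"load_warehouse"},
}


def execution_batches(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into batches; tasks within a batch could run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already completed
        batches.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking downstream tasks
    return batches


if __name__ == "__main__":
    for step, batch in enumerate(execution_batches(PIPELINE), start=1):
        print(step, batch)
```

The final batch contains both `notify_team` and `refresh_dashboard`, since each depends only on `load_warehouse`; a real orchestrator would dispatch them to workers concurrently.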

Business Process Orchestration:

Low-code platforms like ServiceNow’s Workflow Engine (2010s) enabled business users to design approval workflows or incident escalations without coding. ServiceNow’s Flow Designer went further, supporting end-to-end process automation that combines approvals, notifications, and integrations into a single workflow (ServiceNow, 2024). These tools abstracted underlying APIs, allowing drag-and-drop integration with HR, IT, and finance systems[9][13].

AI-Driven Automation: The Cognitive Era

Machine Learning in Automation

Modern AI frameworks like TensorFlow and PyTorch enable systems to learn from historical data. For example, AI models can predict server failures by analyzing log patterns or optimize supply chains using real-time sales data[1][12]. OpenAI’s Codex translates natural language into shell commands or API calls (McKinsey, 2023), and AI-powered chatbots like Amtrak’s “Julie” handle 5 million queries annually, integrating with backend APIs to provide real-time booking updates (Mize, 2023).

AI Automation Workflow:

- Data Ingestion: Collect metrics from APIs, logs, and sensors.

- Model Training: Train a neural network on failure patterns.

- Inference: Deploy the model to monitor systems proactively.

- Self-Healing: Trigger scripts to restart services or scale resources[12][15].
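The four steps above can be sketched in miniature. To stay short and dependency-free, this sketch swaps the neural network for a much simpler statistical baseline: it learns the mean and standard deviation of historical readings and flags samples whose z-score exceeds a threshold. The metric values, the threshold, and the restart action are hypothetical.

```python
import statistics
from typing import Callable, List, Sequence, Tuple


def train_baseline(history: Sequence[float]) -> Tuple[float, float]:
    """'Model training': learn the mean and spread of normal behaviour."""
    return statistics.mean(history), statistics.stdev(history)


def monitor(
    samples: Sequence[float],
    baseline: Tuple[float, float],
    threshold: float,
    remediate: Callable[[int, float], None],
) -> List[int]:
    """'Inference' plus 'self-healing': flag outliers and call a remediation hook."""
    mean, stdev = baseline
    anomalies: List[int] = []
    for index, value in enumerate(samples):
        z_score = (value - mean) / stdev if stdev else 0.0
        if abs(z_score) > threshold:
            anomalies.append(index)
            remediate(index, value)  # e.g. restart a service or add capacity
    return anomalies


if __name__ == "__main__":
    # Hypothetical response times (ms) ingested from logs or an API.
    history = [100, 102, 98, 101, 99]
    live = [100, 101, 130, 99]

    def restart_service(index: int, value: float) -> None:
        print(f"sample {index} = {value} ms looks anomalous; restarting service")

    print(monitor(live, train_baseline(history), threshold=3.0, remediate=restart_service))
```

A trained model would replace `train_baseline` and the z-score test, but the surrounding loop of ingest, score, and remediate keeps the same shape.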

Generative AI and Code Synthesis

Tools like GitHub Copilot (2020s) use large language models (LLMs) to generate scripts that combine API calls, SQL queries, and CI/CD configurations. For instance, an LLM might auto-generate a Python script that synchronizes Salesforce data with Snowflake using Airbyte connectors[9][12].

The Demand for Real-Time Data and Emerging Tools

Streaming Technologies

Apache Kafka (2010s) and AWS Kinesis (2010s) revolutionized real-time data processing, enabling applications like fraud detection and live dashboards. These platforms can ingest millions of events per second into durable, partitioned streams that downstream consumers process with low latency, often within milliseconds[33][36].
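Fraud detection on a stream often reduces to a windowed rule evaluated as each event arrives. The consumer-side logic can be sketched independently of any Kafka or Kinesis client library; the event format (timestamp, card ID), the 60-second window, and the three-event limit are hypothetical values chosen for illustration, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, card ID)


def flag_bursts(
    events: Iterable[Event], window_seconds: float = 60.0, max_events: int = 3
) -> List[Event]:
    """Flag any event that pushes a card above max_events within the sliding window."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: List[Event] = []
    for timestamp, card_id in events:
        window = recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - window_seconds:
            window.popleft()
        if len(window) > max_events:
            flagged.append((timestamp, card_id))
    return flagged


if __name__ == "__main__":
    stream = [(0, "A"), (0, "B"), (10, "A"), (20, "A"), (30, "A"), (95, "A"), (100, "B")]
    print(flag_bursts(stream))  # prints [(30, 'A')]
```

Each event does a constant amount of work on average, which is what lets this kind of rule keep pace with high-volume streams.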

Edge AI

Edge devices with embedded AI (e.g., IoT sensors) now preprocess data locally, reducing cloud dependency, latency, and bandwidth use (Honeywell, 2024). Coupled with 5G’s low latency, this enables real-time analytics in autonomous vehicles and smart factories[1][30].
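Local preprocessing on an edge device can be as simple as condensing a window of raw readings into one compact summary before upload, so that only the summary and any out-of-range values travel to the cloud. The function name, field names, and thresholds below are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Union

Summary = Dict[str, Union[int, float, List[float]]]


def summarize_window(readings: List[float], low: float, high: float) -> Summary:
    """Reduce a window of raw sensor readings to a small payload for upload."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Keep raw values only for readings that need attention.
        "out_of_range": [r for r in readings if r < low or r > high],
    }


if __name__ == "__main__":
    window = [20.0, 22.0, 24.0, 34.0]  # e.g. temperature samples in °C
    print(summarize_window(window, low=15.0, high=30.0))
```

However many samples the window holds, the upload stays a handful of fields, which is where the bandwidth saving comes from.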

Future Trends and Predictions

- Quantum Automation: Quantum algorithms could accelerate hard problems such as logistics-route optimization and cryptographic key search beyond what classical systems can achieve[1][15]. Proponents argue that quantum computing could deliver dramatic speedups for specific data-processing workloads, enabling real-time analytics for industries like finance and healthcare (CBRE, 2023).

- Self-Orchestrating AI: Models like GPT-5 may design entire automation pipelines by interpreting natural language requests (e.g., “Build an inventory restocking workflow”)[12].

- Ethical AI Governance: Frameworks to audit AI-driven decisions for bias, ensuring compliance with regulations like GDPR[12][27].

Conclusion

From rudimentary scripts to AI orchestrators, automation has relentlessly accelerated data velocity, empowering industries to act on insights milliseconds after generation. Each leap—whether APIs, CI/CD, or neural networks—has dissolved bottlenecks, enabling seamless interoperability. As quantum computing, edge AI and ethical AI mature, the next frontier will prioritize not just speed, but self-optimization, trust and adaptability in autonomous systems. Organizations that leverage these advancements will dominate in an era where data velocity dictates competitiveness.

References

- Squirro. (2024). The History and Evolution of Automation. https://squirro.com

- Luhhu. (2023). The Evolution of Automation. https://luhhu.com

- Kalil, M. (2024). History of Industrial Automation. https://mikekalil.com

- Matillion. (2025). Data Integration Tools. https://matillion.com

- Oracle. (2023). JWT Authentication. https://oracle.com

- Airbyte. (2025). Data Transfer Tools. https://airbyte.com

- Akrity. (2021). Real-Time Data Tools. https://akrity.com

- ThinkAutomation. (n.d.). History of AI. https://thinkautomation.com

- Sanka. (2023). Automation Evolution. https://sanka.io

- Auth0. (n.d.). REST vs SOAP. https://auth0.com

- Airbyte. (2024). Real-Time Data Processing. https://airbyte.com

- Firgelliauto. (2023). What is the evolution and history of Automation? https://www.firgelliauto.com